The What

By the standard definition, benchmarking is the process of measuring an application's performance, either on its own or against competitor applications, in order to extract and analyse performance metrics.

Nowadays, benchmarking is critical in the development cycle, mainly because any one application has a multitude of copycats with very similar content, so users tend to go with the one that performs best.

The growing and highly competitive market “demands” top performance from an application if it is to thrive and keep an increasing pool of users engaged.

Moreover, statistics from top-rated projects across different genres show that most of the revenue comes from mid-end devices rather than high-end ones, meaning that this game of cat and mouse calls for constant adaptability.

A veteran fan of a game will most likely overlook functional or visual bugs along the way, but when the device itself comes under stress (high battery consumption, overheating, low FPS), they'll most likely stop playing.

In this article we'll present Amber's take on the whole process, the most relevant metrics that confirm or disprove performance concerns, and the strategies we have created to fully review all of the technical areas from a performance standpoint.

The How

The service covers 4 types of testing based on 3 essential phases.

Each of the testing types has its own scope and can be used as a single service or as a full package covering all of the project needs from a performance perspective.

These services can be part of a continuous engagement, where our team works closely with the Development team to make sure that new integrations don't negatively affect overall game performance and/or stability.

Also, based on availability, our team can cover these sub-services as ad-hoc requests whenever the technical expertise is required for performance validation.

Testing Types

- Single build validation: this is a preliminary test that establishes a baseline for subsequent tests. The target here is to gather data that we can compare against the next builds that come in for testing.

- Build-to-build comparison: this is the most common test among our partners. The focus here is to extract, analyse and compare data between a regular build and another build with added content.

- Device coverage: this type of testing is performed on a large pool of devices, covering a multitude of hardware-firmware combinations. We recommend this test prior to soft launch, as it's a very good method of identifying the range of devices on which the application can run and blacklisting the ones that don't meet the requirements. It is a very effective way of reducing negative reviews/feedback and avoiding the retention drops generated by the affected pool of devices.

- Competitive benchmarking: this type of testing is of great importance, as it shows how competitor applications perform in comparison to the ones that we're testing. Amber QA recommends executing these tests at least once per quarter so that we have clear visibility on the added optimizations and can share the data with the Developers.
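To make the build-to-build idea concrete, here is a minimal sketch of how averaged metrics from two builds could be compared. It is an illustration only, not Amber's actual tooling: the metric names, the sample values and the `compare_builds` helper are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDelta:
    metric: str
    baseline: float
    candidate: float

    @property
    def percent_change(self) -> float:
        # Relative change of the candidate build against the baseline build.
        # Assumes a non-zero baseline value.
        return (self.candidate - self.baseline) / self.baseline * 100.0


def compare_builds(baseline: dict[str, float], candidate: dict[str, float]) -> list[MetricDelta]:
    """Pair up the metrics present in both builds and compute their deltas."""
    shared = sorted(baseline.keys() & candidate.keys())
    return [MetricDelta(name, baseline[name], candidate[name]) for name in shared]


# Hypothetical averaged values for two builds measured on the same device.
regular_build = {"avg_fps": 58.0, "ram_mb": 800.0, "boot_time_s": 10.0}
content_build = {"avg_fps": 52.2, "ram_mb": 920.0, "boot_time_s": 12.5}

for delta in compare_builds(regular_build, content_build):
    print(f"{delta.metric}: {delta.baseline} -> {delta.candidate} ({delta.percent_change:+.1f}%)")
```

Whether a given change counts as a regression depends on the metric (a drop in FPS is bad, a drop in boot time is good), so the interpretation is left to the tester.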

Testing Phases

Phase 1 - Planning & Setup

- Discuss the targeted date and confirm no overlaps are present;

- Set up the hardware and the secured network;

- Prepare the targeted devices and confirm that the same parameters are met on all of them (100% battery charge, auto-adaptive brightness, placement in a neutral environment that will not influence the temperature, etc.);

- Reset the devices before kick-off and after the request is completed, so that the builds are seen and reviewed only by the dedicated team;

- Create a customized template based on the existing needs;

- Confirm the external PoC who will help the benchmarking team if they encounter blocker or critical bugs that would hinder testing;

- Discuss the testing instructions that would target the areas of focus;

- Run a smoke & sanity check on the builds to make sure that they're bootable and functional before starting the scheduled tests.

Phase 2 - Execution

- Split the targeted devices / platforms so that the tests are executed in parallel and all involved parties can discuss the identified issues as soon as they're spotted;

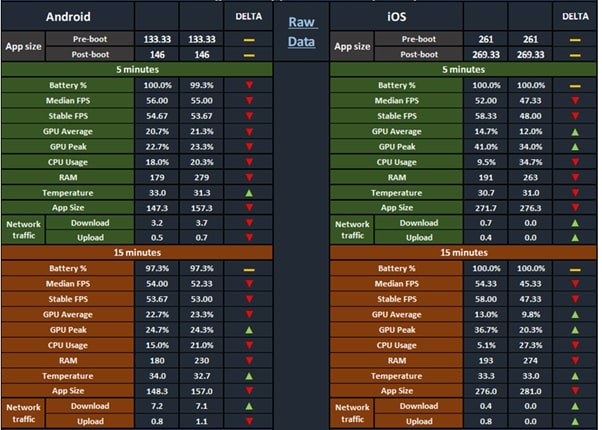

- Extract the metrics at set timeframes (such as 5 minutes, 15 minutes, 30 minutes and 1 hour of gameplay) or by following pre-set instructions that may vary in duration;

- The raw data for the extracted metrics will contain 15-20 values per metric and the specialized testers will calculate averages for each of the targeted areas;

- In case of high-impact bugs affecting performance and/or functionality, a bug report containing reproduction steps and rate, attached proof and relevant logs will be created and shared;

- In case of issues that are device specific, the specialized testers will retry the tests on 2-3 devices with the same specifications as the affected device and gather more data.
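The averaging step described above can be sketched in a few lines. This is only an illustration under assumed inputs: the metric names and the sample values are made up, and `average_metrics` is a hypothetical helper rather than part of any existing tool.

```python
from statistics import mean


def average_metrics(raw_samples: dict[str, list[float]]) -> dict[str, float]:
    """Collapse the raw values collected for each metric into one average."""
    return {
        metric: round(mean(values), 2)
        for metric, values in raw_samples.items()
        if values  # skip metrics for which nothing was captured
    }


# Hypothetical raw data: 15 values per metric, captured during one timeframe.
raw_samples = {
    "fps": [60.0, 59.0, 58.0, 60.0, 57.0, 55.0, 60.0, 59.0,
            58.0, 60.0, 56.0, 57.0, 59.0, 60.0, 58.0],
    "temperature_c": [35.0] * 5 + [37.0] * 5 + [39.0] * 5,
}

for metric, average in average_metrics(raw_samples).items():
    print(f"{metric}: {average}")
```

Running the same function once per timeframe (5, 15, 30 and 60 minutes) gives one averaged row per checkpoint for the report.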

Phase 3 - Reporting & Feedback

- Add the extracted & analysed data in the customized template;

- Add testing notes with an overview of the performed run, pinpointing the identified issues and/or critical areas;

- Attach videos/pictures/logs and reproduction steps to help the development team;

- Share the report with all parties;

- Address any questions that arise after the report is shared.

Under the Hood

Technical Metrics

“system of measurement used for accurately checking hardware stress”

FPS (Frames per Second) - total frames rendered by a game in one second. Rendering usually targets 30 or 60 FPS (and may run lower), depending on game genre.

This is one of the most relevant metrics for validating overall game performance.
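As a small worked example, average FPS can be derived from per-frame render times, and each frame can be checked against the time budget implied by a 30 or 60 FPS target. The sketch below assumes frame times are already available in milliseconds; how they are captured depends on the profiling tool, and the function names are hypothetical.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by the total time they took, in seconds."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def frame_budget_ms(target_fps: int) -> float:
    """Time available per frame: about 33.3 ms at 30 FPS, 16.7 ms at 60 FPS."""
    return 1000.0 / target_fps


def slow_frame_ratio(frame_times_ms: list[float], target_fps: int) -> float:
    """Share of frames that took longer than the budget for the target."""
    budget = frame_budget_ms(target_fps)
    slow_frames = sum(1 for t in frame_times_ms if t > budget)
    return slow_frames / len(frame_times_ms)


# Hypothetical capture: eight smooth frames and two long ones.
frame_times = [16.0] * 8 + [36.0] * 2

print(f"average FPS: {average_fps(frame_times):.1f}")
print(f"frames over the 60 FPS budget: {slow_frame_ratio(frame_times, 60):.0%}")
```

The average alone can hide stutter, which is why the share of slow frames is reported next to it in this sketch.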

RAM (Random Access Memory) - short term storage that holds data used by the CPU and/or active applications.

More RAM = more room for the game assets loaded during play

CPU (Central Processing Unit) - circuit that handles the majority of the phone's functions, such as game instructions & user input processing.

GPU (Graphics Processing Unit) - circuit that handles graphical/visual operations and takes load off the CPU.

Boot times - total time spent performing various operations such as: game boot, menu to sub-menu transitions, dialog display, game lobby loading, etc.

Time is important: would you want to play a game that takes 3 minutes to load?

App size - total storage that the game occupies pre boot (before assets are downloaded), post boot (after all assets are downloaded) and during specific gameplay time.

Nowadays storage space isn't as problematic as it was in the past, yet, there are still a lot of users that hold on to photos, videos & multiple applications on their device, rather than using a cloud solution, so having a clear overview in regards to how much storage space the app uses always comes in handy.

Battery drain - battery percentage consumed during targeted gameplay.

Most graphically rich games directly affect the battery life of the device, which is a big deal breaker for many players.

Our variety of devices with different hardware/firmware combinations enables us to accurately review battery stress that a regular user would encounter.
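Because sessions differ in length, battery readings are easier to compare once they are normalised to a per-hour rate. The sketch below shows one simple way to do that, assuming the drain is roughly linear over the session; the function names and the sample readings are hypothetical.

```python
def battery_drain_per_hour(start_percent: float, end_percent: float, minutes_played: float) -> float:
    """Battery percentage points consumed per hour of gameplay."""
    if minutes_played <= 0:
        raise ValueError("minutes_played must be positive")
    return (start_percent - end_percent) * 60.0 / minutes_played


def hours_until_empty(drain_per_hour: float) -> float:
    """Rough playtime from a full charge, assuming the drain stays linear."""
    if drain_per_hour <= 0:
        raise ValueError("drain_per_hour must be positive")
    return 100.0 / drain_per_hour


# Hypothetical reading: 100% at the start, 91% after 30 minutes of gameplay.
drain = battery_drain_per_hour(100, 91, 30)
print(f"{drain:.1f}% per hour, roughly {hours_until_empty(drain):.1f} h from a full charge")
```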

Device temperature - temperature of the device (in degrees Celsius) measured during gameplay.

A very common issue that we've been seeing in testing is device overheating which, over time, will lead to battery degradation or SoC wear and tear.

Network traffic - validating download and upload traffic during gameplay sessions.

A lot of users play games on cellular data (on the subway, bus, train, etc.), so we always need to make sure that the app isn't eating big holes in their data plans.

Looking at the list of metrics, the temptation to track all of them is high, but the concept of “less is more” applies here.

Our recommendation is to start with the critical areas where optimizations can be added fairly quickly and efficiently; as soon as those are out of the way, we can address the remaining sections that require tweaks.

As an example, the Truecaller application (500M+ downloads, 4.5 stars, 12M reviews) managed to obtain a 30% increase in DAU/MAU just by adding a mix of new features and by improving their launch speed.

Also, big names in the industry such as King & Rovio focus heavily on improving technical metrics in order to maintain a large community that continues to enjoy their games on low & mid-end devices, even in 2020.

Best Practices for Developers

- Include benchmarking in the development process as early as possible;

- Discuss the relevant metrics early on and focus on the critical aspects instead of targeting all of them at once - less is more;

- Run performance benchmarking prior to any major content release;

- Make use of the benchmarking team and validate any optimizations as soon as they're implemented;

- Include a liaison that acts as support for the benchmarking team in order to address all of the potential build issues in an efficient manner;

- Create a tracker based on the development milestones in order to avoid scheduled fixes or maintenance work being done during the run.

Benefits

Identify bottlenecks and performance gaps, and address critical aspects before they become problematic

Reviewing and validating new content prior to its public release contributes greatly to the delivered product, and also to the confidence of all parties involved (Stakeholders - Development - QA - End User).

One of the main roles of the Benchmarking team, along with the other Specialised QA services, is to prevent the snowball effect from ever happening. We act as a support team that finds the spark before firefighting becomes a daily practice.

Including performance benchmarking in the development process enables us to identify issues at an early stage, and enables you to address them before they start to have a negative impact across the user pool.

Efficient Development

Many development teams struggle with issues that slip through the cracks and grow more troublesome as the application evolves. Instead of focusing their efforts on developing new content, they end up missing deadlines, delaying releases, failing submissions, etc.

The large majority of junior game development companies spend most of their time working on new content and features, but once popularity explodes, negative reviews/feedback tend to increase sharply as well.

Why is that happening? The answer is simple: increasing the user pool also means increasing the pool of devices on which the application is installed, and not all users have high-end devices at their disposal.

Increase end-user satisfaction and stimulate retention while finding out the gaps between your product and a competitor product.

Benchmarking, or more precisely the device coverage part of it, is an essential tool for reviewing performance on a high number of devices (low / mid / high end) with various hardware-firmware combinations, validating how they cope with technical stress over extended gameplay time.

In other words, we can know in advance what and where the performance gaps are and, more importantly, have a clear idea of which devices will be supported beyond the OS version limitations.

In most cases, early device blacklisting will keep negative reviews and/or feedback to a minimum.
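A device coverage run could feed a simple rule-based check like the one sketched below, which flags devices as blacklist candidates together with the reasons. The thresholds (25 FPS, 45 degrees Celsius), the device names and the `DeviceResult` structure are assumptions chosen for illustration; real limits would be agreed per project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceResult:
    model: str
    avg_fps: float
    peak_temp_c: float
    crashed: bool


def failure_reasons(result: DeviceResult, min_fps: float = 25.0, max_temp_c: float = 45.0) -> list[str]:
    """List every requirement the device failed (empty list means it passed)."""
    reasons = []
    if result.crashed:
        reasons.append("crashed during the run")
    if result.avg_fps < min_fps:
        reasons.append(f"average FPS {result.avg_fps:.1f} below {min_fps:.1f}")
    if result.peak_temp_c > max_temp_c:
        reasons.append(f"peak temperature {result.peak_temp_c:.1f} C above {max_temp_c:.1f} C")
    return reasons


def blacklist_candidates(results: list[DeviceResult]) -> dict[str, list[str]]:
    """Map each failing device model to the reasons it was flagged."""
    flagged = {}
    for result in results:
        reasons = failure_reasons(result)
        if reasons:
            flagged[result.model] = reasons
    return flagged


# Hypothetical results from a device coverage run.
coverage_results = [
    DeviceResult("Device A", avg_fps=57.0, peak_temp_c=38.5, crashed=False),
    DeviceResult("Device B", avg_fps=19.5, peak_temp_c=47.0, crashed=False),
    DeviceResult("Device C", avg_fps=31.0, peak_temp_c=41.0, crashed=True),
]

for model, reasons in blacklist_candidates(coverage_results).items():
    print(f"{model}: {'; '.join(reasons)}")
```

Keeping the reasons next to each flagged model makes the final blacklist decision easier to review with the development team.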

But why stop here? Besides the multitude of devices that can be checked during device coverage, we also have the option of running tests on the same device with different OS versions. Our QA teams constantly report bugs that are OS specific.

Example:

- iPhone XS Max - OS 11.x - crashes after 15 minutes of continuous gameplay

- iPhone XS Max - OS 12.x - no crash is reported after extended gameplay time

Now imagine targeting the Top 30 devices that bring in the most revenue, running on 3 different OS versions - by including ongoing performance review, all of them can and will be validated long before the users start seeing these issues on the Live builds.
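To get a feel for the size of such a run, the device and OS combinations can be enumerated up front. The sketch below uses placeholder device and OS labels (all hypothetical) and assumes that every device can run every listed OS version, which would need checking per device in practice.

```python
from itertools import product


def build_test_matrix(devices: list[str], os_versions: list[str]) -> list[tuple[str, str]]:
    """Every (device, OS version) pair that needs a benchmarking run."""
    return list(product(devices, os_versions))


# Placeholder labels standing in for the Top 30 devices and 3 OS versions.
top_devices = [f"device-{index:02d}" for index in range(1, 31)]
os_versions = ["os-1", "os-2", "os-3"]

matrix = build_test_matrix(top_devices, os_versions)
print(f"{len(matrix)} device/OS combinations to validate")
```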

More and more senior game development companies are beginning to see the essential role of benchmarking and use it along with functional QA in order to validate their products and increase coverage in an efficient manner by properly checking the implementation of new content before the application reaches the general public.

Whenever an application starts hanging, crashing or showing a noticeable decrease in performance, all of the other metrics are affected.

New content increases popularity for the moment but an optimized game will retain users for longer periods of time.

Do you remember that nice pair of sneakers that you got for your birthday?

What if they fell apart after one week of wear? That wouldn't be a very nice experience, and you most likely wouldn't choose that brand when getting a new pair.

Hardware stress on users' devices has a similar impact, and we wouldn't want that to happen, since it leads to a shrinking user pool, a bunch of negative reviews and a negative wall of text on the app store.

From a psychological perspective, we're prone to recall negative emotions/memories much more easily than positive ones, so in our line of work we're constantly on thin ice, hence our need to keep adapting to all of the incoming factors.

Fortunately, we have all of the means at our disposal to make sure that all applications that go through the performance benchmark cycles are fully validated and ready to be enjoyed by the community.

Template Samples